If you’ve been anywhere near UX, product, or tech, you’ve probably heard the golden rule: “You only need 5 users for usability testing.”

At first, it sounds counterintuitive. Just five? Surely, you’d need more. The more the merrier, right? More people, more data, more accuracy?

But if you’ve done any form of user testing before, this idea might ring true. You’ve probably seen how the same usability problems start showing up within the first few sessions.

However, explaining this to someone new to UX (especially a stakeholder clutching their Excel budget sheet or someone with a love for “robust sample sizes”) can be… a bit of a battle. Not everyone buys into the “just 5 users” philosophy. And to be fair, they’re not entirely wrong.

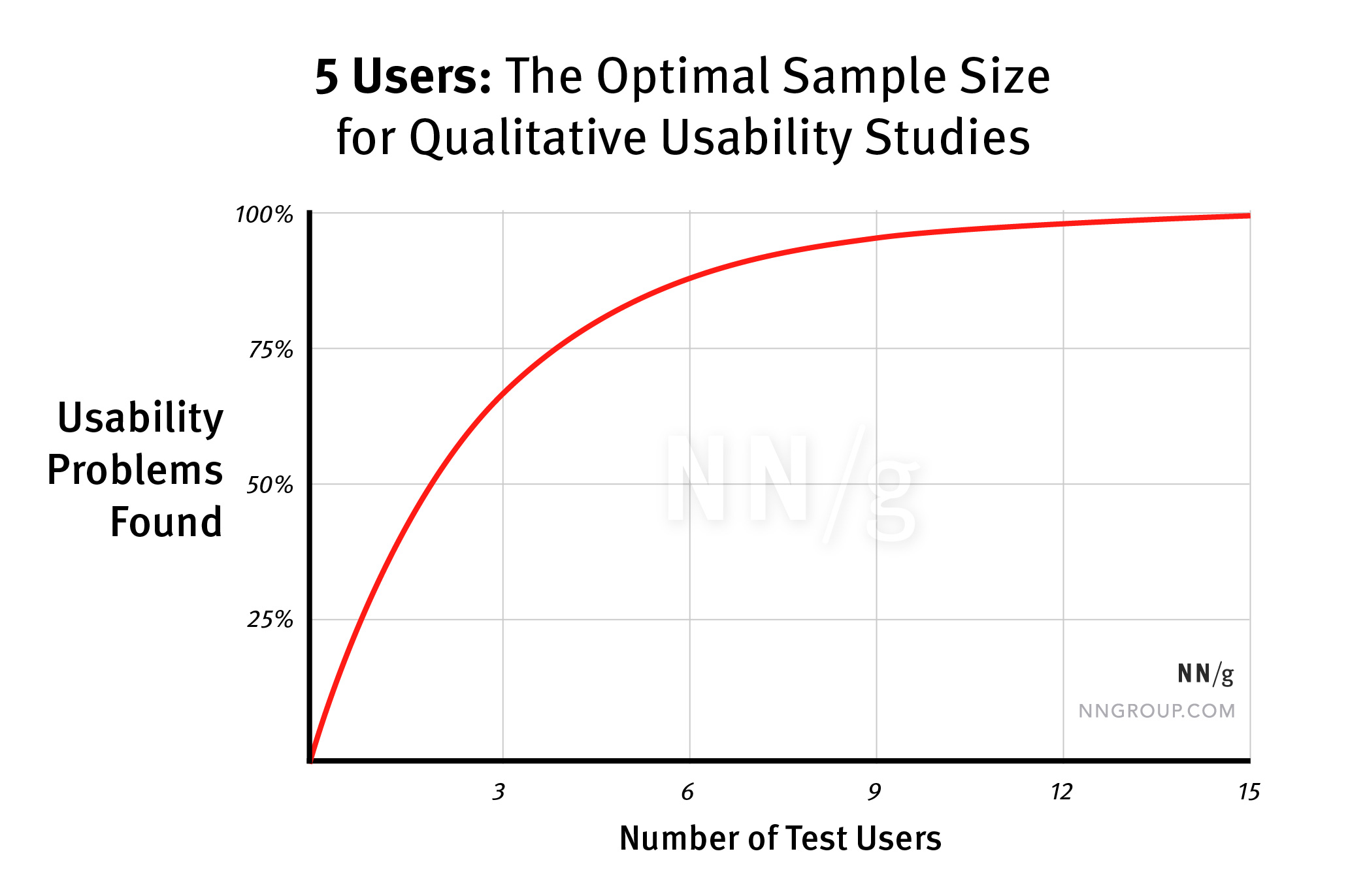

If you try to look up this claim, chances are you will be bombarded with probability formulas and graphs like the classic usability curve from Nielsen Norman Group. Yep, this one:

You Don’t Need 20 People to Tell You the Same Thing

Remember that soup analogy we posted a couple of weeks ago? You can check out the LinkedIn post here. Essentially, it goes like this: Picture trying a new restaurant. One spoonful of their soup, and it’s got you immediately thinking, “This is way too salty!”

If something’s obviously wrong, it doesn’t take 30 people to point it out. A few honest reactions will do the job. You don’t need a spreadsheet. You just know. That’s exactly how usability testing works. When a flow is confusing or a button’s doing something weird, most users will trip up, and they’ll tell you. Out loud. Repeatedly.

After testing with 5 people, you’ll usually hear the same feedback again and again. It’s not magic, it’s just… patterns. Humans are pretty good at spotting things that break their flow. And when something breaks it hard, it’s noticeable fast.
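If you like seeing the pattern as numbers, here is a minimal sketch of the model behind that usability curve. It estimates the share of problems found after n sessions as 1 − (1 − L)^n, where L is the chance that any single user runs into a given problem. The default of 0.31 is the average usually cited from Nielsen and Landauer's data; it is an assumption here, and your own product may sit well above or below it. The function name is made up for illustration.

```python
def share_of_problems_found(n_users: int, p_detect: float = 0.31) -> float:
    """Expected share of usability problems seen at least once after n_users sessions.

    Simplifying assumption: every problem is equally likely (p_detect)
    to be hit by any one participant.
    """
    return 1 - (1 - p_detect) ** n_users


# Print the curve at a few sample sizes.
for n in (1, 3, 5, 10, 15):
    print(f"{n:>2} users: {share_of_problems_found(n):.0%}")
```

Under that assumption the curve reaches roughly 84% at five users and flattens quickly afterwards, which is the "same feedback again and again" effect in numeric form.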

So, Why Do People Still Ask for 20+ Users?

Because there’s a difference between knowing what’s broken and knowing how bad it is, why, and for whom.

The five-user rule applies best to qualitative testing, when you want to find and fix usability issues quickly. But when your goal is quantitative, like benchmarking success rates, measuring time on task, or running preference tests, you do need more participants. That’s where statistical confidence comes in, and suddenly you’re looking at 20, 30, even 100+ users.
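To make "statistical confidence" a little more concrete, here is a rough sketch that puts a 95% interval around a measured task success rate. It uses the Wilson score interval, which is one common choice for small samples and not the only valid one. The 80% success rate and the sample sizes are made-up numbers for illustration.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z = 1.96 is roughly 95% confidence)."""
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# Same observed 80% success rate, three different (hypothetical) sample sizes.
for successes, n in ((4, 5), (16, 20), (80, 100)):
    low, high = wilson_interval(successes, n)
    print(f"{successes}/{n} succeeded: plausible true rate {low:.0%} to {high:.0%}")
```

With five users, 4 out of 5 succeeding is compatible with a true success rate anywhere from roughly 38% to 96%. At 100 users the same 80% narrows to roughly 71% to 87%. That gap is why five sessions can find problems but cannot benchmark them.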

Also, context matters. Five users might be enough for testing a simple mobile app or landing page. But if you’re working on:

- A multi-step workflow with various user roles

- A global product spanning multiple cultures, languages, and devices

- A highly regulated enterprise tool with niche use cases

- Or a very niche audience

…then yes, you’ll probably need more sessions, more user types, and more rounds of testing. In those situations, even just recruiting the right kind of user can be half the challenge.
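One way to see why these cases need more sessions: when your audience is varied, any single problem is likely to affect a smaller slice of participants. The sketch below asks how many sessions it takes to have a good chance of seeing a problem at least once, using the simple model where each participant independently hits it with probability p. The 85% target and the example probabilities are assumptions picked for illustration, and the function name is hypothetical.

```python
import math


def sessions_needed(p_detect: float, target: float = 0.85) -> int:
    """Smallest number of sessions with at least `target` chance of observing
    a problem that each participant hits with probability p_detect."""
    return math.ceil(math.log(1 - target) / math.log(1 - p_detect))


# From a common problem down to a rare one.
for p in (0.31, 0.10, 0.05):
    print(f"problem affects {p:.0%} of users: about {sessions_needed(p)} sessions")
```

A problem that trips up about a third of users shows up within a handful of sessions. One that only affects one user in ten needs closer to 19 under these assumptions, which is where extra user types and extra rounds start to pay off.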

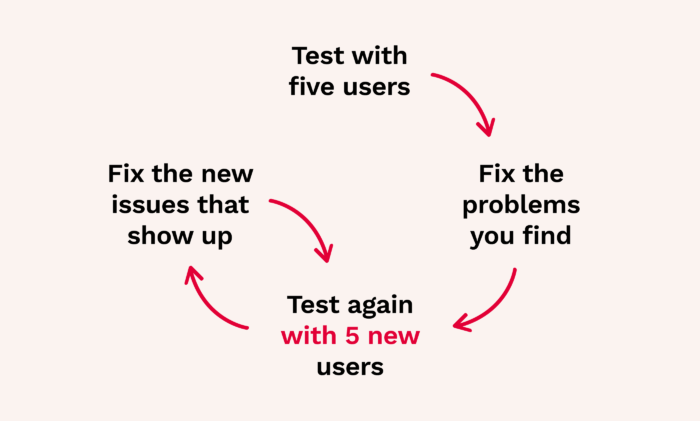

Usability Testing is an Iterative Sport

One of the most overlooked truths in usability testing is this:

It’s not about perfection. It’s about progress.

Jakob Nielsen said it best in his original article:

“The real goal of usability engineering is to improve the design, not just to document its weaknesses.”

What you really want to do is:

The real value in testing with 5 users isn’t just spotting problems; it’s deciding how to design around them. Tesler’s Law says every system has a baseline amount of complexity that can’t be removed: either you deal with it in the design, or you push it onto the user. Testing helps you catch when you’ve accidentally chosen the second option. This is how most good UX work happens. It’s not one big perfect test with 30 people; instead, it’s lots of quick, focused, iterative sessions.

Testing with fewer people isn’t about cutting corners; it’s about cutting through the noise. So, instead of spending the whole budget on a single massive test, break it up into smaller rounds. It allows you to uncover more, waste less, and probably save your sanity along the way.

It helps teams move. And honestly? Movement is what separates design theory from shipping real work.

Back to the Soup Analogy

Let’s say you don’t just want to know if the soup is salty, but how much salt is too much, and whether people from different cultures or dietary needs agree on that.

Now you’re asking a different question. That’s when you’ll need more users, because you’re looking for quantitative insights: not just what’s broken, but how many people it affects and how severely.

That’s when you shift gears. You start looking at variability. Cultural preferences. Taste profiles. Statistical deviations. (And suddenly you’re not in a restaurant anymore; you’re in a lab coat.)

Similarly, in UX research, there’s a difference between spotting a problem and understanding how widespread or critical it is.

That’s why you need to clarify your goals before every study:

- Are we finding issues?

- Are we validating design decisions?

- Are we proving ROI to stakeholders?

- Are we gathering numbers or narratives?

The Real Question

People often get stuck arguing about how many users to test with, when the real question should be: what are we trying to learn?

As a UX designer who’s worked in both lean, fast-paced environments and more structured enterprise settings, I’ve come to appreciate the beauty of “just enough.”

For early-stage design involving sketches, wireframes or basic clickable prototypes, five users are often more than enough to get directionally strong insights. In fact, testing with just three users sometimes gets the ball rolling. I’ve also tested with 20+ users when I had to. But by the time you hit participant ten, you’re usually hearing more of the same. That’s useful for validation, but not always necessary.

But as the design matures, as stakeholders get involved, and as the product becomes more complex or critical, expanding the pool makes sense. Context always wins.

When there’s pushback like “Shouldn’t we test with more people?”, I don’t say “No, five is enough” because some research article said so. Instead, I try to shift the conversation to: “What are we trying to find out right now?”

If you’re trying to spot problems, keep it small. If you’re trying to measure outcomes, go bigger.

The five-user rule isn’t a myth. It’s a practical starting point that works – if you use it wisely.

At Friday, we’re all about getting the most out of each round of testing. Get in touch and let’s turn your questions into real insights to help you move your website forward.